Well, October was quite an eventful month: I turned 36 and got married.

I should really post here more often. It’s out of my comfort zone to share “thoughts” that aren’t tangibly related to code, but we’re in a unique time. I spun up an internal Chat replacement (the cost of furnishing everyone with a paid subscription far outweighs the price of usage-based billing), and it also gives me the ability to equip it with novel data sources. This blog could be one of those, and it could give my kids future access to my thoughts and mindset when I’m otherwise unavailable, if I could actually commit to writing here with some cadence…

Let’s try it.

One of the things I’ve been using v0 heavily for is generating small internal utilities, like our time tracking and expense logging. I need to put these behind some form of authentication, so I ended up spinning up a little Rails OAuth server with email whitelisting.

It works a treat; I can just prompt v0 to wrap any apps in the <OidcProvider /> and have prototypes immediately protected.

You could probably use something off the shelf, like Auth0, but I wanted an excuse to model out a “magic link” flow with FlowState xD
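FlowState models the steps of the flow, but the heart of any magic-link login is a short-lived, signed token tied to an allowlisted email. Here’s a minimal sketch using only Ruby’s standard library, assuming a server-side secret and a hard-coded allowlist. `ALLOWED_EMAILS`, `MagicLink.issue` and `MagicLink.verify` are hypothetical names for illustration, not the internals of my server or of FlowState.

```ruby
require "openssl"
require "base64"
require "json"

# Hypothetical allowlist; in a real app this would live in the database.
ALLOWED_EMAILS = ["me@example.com"].freeze

module MagicLink
  SECRET = "change-me" # assumption: a server-side secret, e.g. from Rails credentials
  TTL = 15 * 60        # tokens expire after 15 minutes

  # Returns a signed token for an allowlisted email, or nil otherwise.
  def self.issue(email, now: Time.now.to_i)
    return nil unless ALLOWED_EMAILS.include?(email.downcase)

    payload = Base64.urlsafe_encode64({ email: email.downcase, exp: now + TTL }.to_json)
    "#{payload}.#{sign(payload)}"
  end

  # Returns the email if the signature is valid and the token hasn't expired, else nil.
  def self.verify(token, now: Time.now.to_i)
    payload, signature = token.to_s.split(".", 2)
    return nil unless payload && signature
    # Constant-time comparison to avoid leaking signature bytes via timing.
    return nil unless OpenSSL.secure_compare(sign(payload), signature)

    data = JSON.parse(Base64.urlsafe_decode64(payload))
    data["exp"] >= now ? data["email"] : nil
  end

  # HMAC-SHA256 over the encoded payload.
  def self.sign(payload)
    OpenSSL::HMAC.hexdigest("SHA256", SECRET, payload)
  end
  private_class_method :sign
end
```

The emailed link would carry the token, and the callback endpoint would call `verify` before starting a session. Keeping the expiry inside the signed payload means no token table is needed, at the cost of not being able to revoke an individual link.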

I came across the “target panic” phenomenon while listening to a podcast this week. It’s a condition archers suffer, where the fear of missing the target prevents them from releasing a shot. I think I’ve suffered something similar with startups: not wanting to work on the wrong thing. I imagine many others have too.

I’ve been working on flow_state, a small gem for modelling, orchestrating and tracing multi‑step workflows natively with Rails.

Back story

Over the years I’ve built countless iterations of pipelines that:

- Fetch data from third-party APIs

- Blend and transform it (lately with generative AI)

- Persist the result in my own models

They always ended up as tangled callbacks or chains of jobs calling jobs, with retry logic scattered everywhere. When something failed, it was hard to trace what happened and where.

I wanted:

- A Rails-native orchestration layer on top of SolidQueue/ActiveJob

- No external dependencies like Temporal or Restate

- A clear audit trail of each state transition, whether it succeeded or failed

- A conventional and Rails-y way to declare states, transitions, guards and persisted artefacts

The core idea

With flow_state you:

- Define props, storing the metadata required to initialise a workflow.

```ruby
prop :third_party_id, String
```

- Declare states.

```ruby
state :pending
state :syncing_api
state :failed_api, error: true
initial_state :pending
```

- Define every possible transition. `transition!` specifies which states can be transitioned from and to, and validates every move. It prevents race conditions by not allowing transitions between incompatible states.

- Define schemas for persisted artefacts. Artefacts are stored during one transition, for use in another.

```ruby
persist :third_party_response, Hash
```

- Apply guards, which block a transition unless a condition holds.

- Write your steps as explicit methods. No magic methods or heavy meta-programming.

```ruby
def start_api_fetch!
  transition!(from: :pending, to: :started_api_fetch)
end

def finish_api_fetch!(result)
  transition!(from: :started_api_fetch, to: :finished_api_fetch) { result }
end

def fail_api_fetch!
  transition!(from: :started_api_fetch, to: :failed_api_fetch)
end
```
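To make “validates every move” concrete, here’s a stripped-down, plain-Ruby sketch of how a transition with allowed `from` states, a guard and a persisted artefact can be checked. This is not flow_state’s implementation: it has no database, no row locking and no persisted audit log, and `TinyFlow`, `InvalidTransition` and `GuardFailed` are hypothetical names.

```ruby
# Hypothetical error types for this sketch.
class InvalidTransition < StandardError; end
class GuardFailed < StandardError; end

class TinyFlow
  attr_reader :state, :artefacts, :history

  def initialize(initial_state)
    @state = initial_state
    @artefacts = {}
    @history = [] # in-memory stand-in for an audit trail of [from, to] pairs
  end

  # Moves to `to` only if the current state is one of `from` and the guard passes.
  # If a block is given along with `persists`, its return value is stored as an artefact.
  def transition!(from:, to:, guard: -> { true }, persists: nil)
    unless Array(from).include?(@state)
      raise InvalidTransition, "cannot move from #{@state} to #{to}"
    end
    raise GuardFailed, "guard blocked #{@state} -> #{to}" unless guard.call

    @artefacts[persists] = yield if persists && block_given?
    @history << [@state, to]
    @state = to
  end
end

flow = TinyFlow.new(:pending)
flow.transition!(from: :pending, to: :syncing_api)
flow.transition!(from: :syncing_api, to: :synced_api, persists: :api_response) { { "title" => "One" } }

begin
  flow.transition!(from: :pending, to: :syncing_api)
rescue InvalidTransition => e
  puts e.message # prints "cannot move from synced_api to syncing_api"
end
```

The real gem layers locking and a persisted transition log on top of this idea; the sketch only shows why an out-of-order call fails loudly instead of silently corrupting state.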

Example: synchronise song data

```ruby
class SongSyncFlow < FlowState::Base
  prop :song_id, String

  state :pending
  state :picked
  state :syncing_api
  state :synced_api
  state :failed_api, error: true
  state :syncing_model
  state :synced_model
  state :failed_model, error: true
  state :completed

  initial_state :pending

  persist :api_response

  def pick!
    transition!(
      from: %i[pending completed failed_api failed_model],
      to: :picked,
      after_transition: -> { enqueue_api_job }
    )
  end

  def finish_api!(response)
    transition!(
      from: :syncing_api,
      to: :synced_api,
      persists: :api_response,
      after_transition: -> { enqueue_model_job }
    ) { response }
  end

  def fail_api!
    transition!(from: :syncing_api, to: :failed_api)
  end

  # …and so on…
end
```

- Your jobs simply call `pick!`, `finish_api!`, etc.

- Your transitions raise errors if your app attempts to transition from incompatible states, as modelled by you.

- Each call is locked, logged and validated.

- Guards stop you moving on until data is correct.

- Artefacts can be stored between transitions, for later reference.

Getting started

- Add to your Gemfile:

```bash
bundle add 'flow_state'
```

- Run the installer and migrate:

```bash
rails generate flow_state:install
rails db:migrate
```

- Create your flows in `app/flows`, e.g. `SongSyncFlow` in `app/flows/song_sync_flow.rb`.

I’m using it across two projects at the moment, and it’s made reasoning about complex stepped workflows considerably easier. It’s helped me design workflows that are resilient by default.

I’ve been involved in a bunch of “Industry 4.0” projects over the years, essentially creating trackability through on-the-floor systems (typically in settings like warehouses, construction sites etc).

The choice of implementation has generally come down to: “Are we going to ship a native app?” If so, we’ll use NFC tags; if not, we’ll use QR codes.

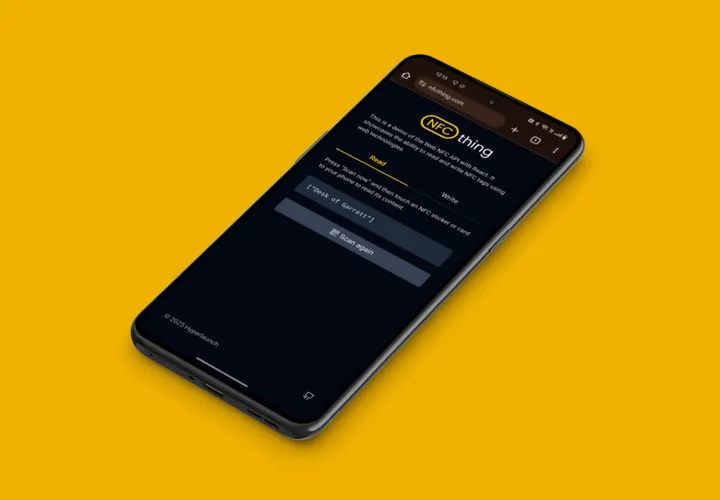

I much prefer to ship web apps for this sort of project, mainly because of the freedom of deployment and lack of gatekeepers. So I was super excited when I stumbled across the Web NFC API at the weekend.

So much so, I decided to build NFCThing, a React-based SPA for reading and writing NFC tags entirely from the browser.

As you’d expect, Apple are dragging their heels so it’s only usable with Chrome on Android right now. This is fine for my embedded use cases, but wider support would open up a whole world of more creative possibilities.

In terms of build, the source is available here (I wrote a hook around the Reader API that mimics the ergonomics of SWR somewhat). You can try the app out here (in Chrome on an Android device).

As with most of my toy projects, I decided to bundle in some R&D - specifically trying out Bun’s new bundler. This is a development I’ve been eagerly anticipating, and was very happy to put to use. The entire app dev/build life cycle is managed by Bun. It’s generally a beautiful thing, but I did hit a few rough edges:

- The Bun Tailwind plugin seems to struggle with builds to nested output directories (i.e. building to `./dist` works fine, but building to `./.output/static` results in none of the used Tailwind classes being bundled into the CSS file).

- When I was deploying to Vercel with their standard CI/CD, the `index.html` file generated from `bun run build` was broken and didn’t match what I’d get from running the same process locally. There were no errors thrown, so I ultimately put this down to Vercel’s version of Bun being out of date.

- Until that gets resolved, I wrote a quick GitHub Action to build the output and deploy it to Vercel as a prebuilt bundle.

So the end result is a nice little demo of the Web NFC capabilities, using Bun end-to-end, with automated deployments to Vercel.

I’ve been pulling together a dataset of ~4k UK funding rounds. My first pass stored the data in local JSON files, to use as a collection with Astro. But I remembered Stef speaking highly of DuckDB’s ability to read from large, ad hoc data sources, so I thought I’d have a go at loading the JSON files with it. It’s great! It takes milliseconds to load the 4k files, and then I can effortlessly query them with SQL as though they were a single table. Super useful. It removes an entire ETL process for me.

I picked up Golang last year. I shipped an “embedded” app across a few hundred kiosks at DFW airport, and Go’s ability to compile down to a single executable across platforms was a big enabler. But with the 1.2 release of Bun, I’d probably use that instead. Bun’s got the same compilation capability now, comparable performance, and is rapidly providing a rich standard library. I also find TypeScript more enjoyable than Go (just personal preference). Makes me wonder what the future looks like for Go.

I, somehow, came into possession of an M2 MacBook Pro that only has 8GB of RAM. The low memory makes using OS X thoroughly unenjoyable, so I decided to take a shot at running Fedora via Asahi Linux on it.

It’s actually great; quite consistent with Ubuntu running on my main rig, and so snappy it’s become my primary laptop. I recommend anyone with an aging Apple Silicon laptop try it out. The only frustration I’ve had is that the battery consumption when it’s suspended is bizarrely high.

I’ve really settled into the idea that I want to do a tech startup again. I just don’t yet know what that startup is. But I do know some of the key components I want:

- A simple starting point that can grow into a platform. I want something I can ship early, that’s immediately useful, but that can scale up into a multi-faceted platform.

- A clear business model. It must make money, from day one if possible.

- Fandom. I want a product people become fans and advocates of. Conferences, merch, people complaining that we don’t have a native app yet. I want people to put our quarterly big reveals in their calendars.

- No gatekeepers or heavy regulation. I want the freedom to build and ship fast, without waiting on slow-moving approvals.

- No cold start problem. A single user should get immediate value. No massive or private datasets, or network, required at launch.

- No friction across borders. We shouldn’t need to write new code to support a new territory.

- A money maker for users. The easiest sell for a product is a guaranteed return on investment.

- Valuable to me. I don’t want to rely on customers for a feedback loop.

- The ability to scale from grassroots to enterprise.

- A network effect. Every new user should help spread the product.

I also want to exploit what I already know: LLMs, RAG, semantic search, recommendation engines, barcode scanning, media streaming, eCommerce logistics, finance/accounting. So much IP that I’ve accumulated through Hyperlaunch. I’ve got deep experience with industries like music, fitness, construction, real estate, aviation, and pharma. But music doesn’t beget good outcomes for tech companies, pharma is a closed industry, and I really don’t want to deal with the trades.

‘Lantian’, meaning ‘blue sky’ in Mandarin Chinese […] The similarity between Graber’s given name and Bluesky is purely coincidental; Jack Dorsey had chosen the name ‘Bluesky’ for the research initiative before Graber became involved

How cool is that? The Bluesky CEO’s name actually translates to “blue sky”, by pure coincidence.

I’ve been working on Hyperkit over the past fortnight, and the first release is now live on npm. It’s a collection of headless custom elements/web components designed to simplify building rich UIs with server-side frameworks like Rails or Revel.

It’s more like Headless UI than Shoelace: you bring your own styling.

Here’s an example of a styled sortable list:

```html
<hyperkit-sortable class="bg-white rounded shadow-md w-64 overflow-hidden">
  <hyperkit-sortable-item
    class="flex items-center justify-between pr-4 pl-3 py-2 border-b border-b-stone-200 data-[before]:border-t-2 data-[before]:border-t-blue-400 data-[after]:border-b-2 data-[after]:border-b-blue-400 text-stone-800"
  >
    <hyperkit-sortable-handle>
      <button
        class="cursor-move mr-2 text-stone-400 select-none font-black px-1"
      >
        ⋮
      </button>
    </hyperkit-sortable-handle>
    <span class="text-xs font-medium flex-grow">Item 1</span>
  </hyperkit-sortable-item>
  <hyperkit-sortable-item
    class="flex items-center justify-between pr-4 pl-3 py-2 border-b border-b-stone-200 data-[before]:border-t-2 data-[before]:border-t-blue-400 data-[after]:border-b-2 data-[after]:border-b-blue-400 text-stone-800"
  >
    <hyperkit-sortable-handle>
      <button
        class="cursor-move mr-2 text-stone-400 select-none font-black px-1"
      >
        ⋮
      </button>
    </hyperkit-sortable-handle>
    <span class="text-xs font-medium flex-grow">Item 2</span>
  </hyperkit-sortable-item>
</hyperkit-sortable>
```

The pull of React, for me, has always been the clarity and DX that comes with colocating a component’s behaviour with its markup and styling. It makes a complex front-end far more intuitive and easier to reason about. The counterpart in Rails is Stimulus, which comparatively feels disconnected, relying on magic incantations of data attributes to apply behaviour. There’s a lack of traceability with Stimulus.

But for situations like a stylable select, or a click-to-copy component, I don’t want to be reaching for React. Thus, Hyperkit: custom elements for UX patterns like sortable lists, repeating fieldsets and even calendars. Because Hyperkit uses custom elements, they’re just plain HTML tags, with all the behaviour encapsulated in the tag. No need to write a line of JavaScript.

And if you use it with Phlex, you can build end-to-end frontends in pure Ruby 🤌

I’ve been working on some embedded touch screen projects recently, SPAs built with React. A requirement came up to enable D-Pad navigation of the UI, with the entire functionality of the app needing to be accessible.

Making features designed for the capabilities of a full-blown touch screen fully accessible with a 5 button controller isn’t exactly trivial, especially with complex components like dropdowns and a virtual keyboard in the mix.

I ended up undertaking some R&D to come up with a solution. Based on convention, a “virtual cursor” seemed to be the best solution - making every element of the UI accessible without any additional design consideration. I’m sure it’ll have some further utility, so I’ve wrapped it up as a React hook. It’s headless, so you can style the cursor however you like.

It’s currently quite specific in scope: designed for non-scrolling, full screen kiosk apps, and there are a couple of clear enhancements that I’ve already identified. I think over time this could prove to be a great accessibility option for these sorts of kiosk implementations.

Check it out: @hyperlaunch/use-virtual-cursor
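The package itself is a React hook, but the core of a D-Pad-driven virtual cursor is small: move a point by a fixed step per key press, keep it inside the screen, and on “select” find the element underneath it. Here’s a language-agnostic sketch of that idea in Ruby, assuming a fixed step size and on-screen elements represented as simple rectangles. `VirtualCursor`, `Rect` and `STEP` are illustrative, not the package’s actual internals.

```ruby
# A rectangle on screen; x/y is the top-left corner.
Rect = Struct.new(:name, :x, :y, :w, :h) do
  def contains?(px, py)
    px >= x && px < x + w && py >= y && py < y + h
  end
end

class VirtualCursor
  STEP = 20 # pixels moved per D-Pad press (assumption)
  DIRECTIONS = { up: [0, -1], down: [0, 1], left: [-1, 0], right: [1, 0] }.freeze

  attr_reader :x, :y

  def initialize(width, height)
    @width = width
    @height = height
    @x = width / 2 # start in the centre of the screen
    @y = height / 2
  end

  # Moves the cursor one step in the given direction, clamped to the screen bounds.
  def move(direction)
    dx, dy = DIRECTIONS.fetch(direction)
    @x = (@x + dx * STEP).clamp(0, @width - 1)
    @y = (@y + dy * STEP).clamp(0, @height - 1)
    [@x, @y]
  end

  # On "select", returns the topmost element under the cursor (later elements are drawn on top).
  def target(elements)
    elements.reverse.find { |el| el.contains?(@x, @y) }
  end
end
```

Because the cursor only needs element bounds and a hit test, any existing touch UI becomes navigable without redesigning it for focus order, which is the appeal over a focus-based approach for this sort of kiosk.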

It’s been a while since I shipped a client project with Hyperlaunch, so I’m super excited to release the new Space Baby app for U2. I’m even more excited to be able to say that I’m the first developer in the world to develop an app integration with The Sphere.

What is it?

It’s a companion app for U2’s residency at the Sphere. Whenever U2 content is playing on the “exosphere”, the app goes into “broadcast mode”, allowing fans to tune in and listen to the audio accompaniment. This includes the Space Baby speaking, so there was a fair bit of technical wrangling to make sure it’s millisecond-accurate: essentially pre-downloading the entire clip and then seeking to the right point, to avoid latency issues with streaming.
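The syncing trick boils down to one calculation: when a client joins (or a broadcast starts), work out how far into the clip playback should already be, and seek the pre-downloaded audio to that point. A minimal sketch, assuming the broadcast’s start time is known as epoch milliseconds and the client clock is reasonably close to the server’s; `seek_position` and its parameters are hypothetical.

```ruby
# Returns the position (in seconds) to seek the pre-downloaded clip to,
# or nil if the broadcast hasn't started yet or has already finished.
def seek_position(started_at_ms:, now_ms:, clip_duration_s:)
  elapsed_s = (now_ms - started_at_ms) / 1000.0
  return nil if elapsed_s.negative? || elapsed_s >= clip_duration_s

  elapsed_s
end

# A client that connects 12.5s into a 90s clip should start playback 12.5s in.
puts seek_position(started_at_ms: 1_000_000, now_ms: 1_012_500, clip_duration_s: 90) # prints 12.5
```

Seeking a local file makes this offset the only source of drift; a production version would also want to correct for clock skew between device and server.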

Outside of broadcasts, there’s an ambient soundtrack produced by Brian Eno.

When a broadcast starts, we receive a notification from the Sphere’s internal tooling to update the currently playing clip, and my stack then pushes a WebSocket event to all active users to update the state in real time.
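Architecturally this is a classic fan-out: one inbound notification updates a single “now playing” record, which is pushed to every connected client, and late joiners receive the same record when they connect. Here’s an in-process Ruby sketch of that shape, standing in for the real Turso and Pusher setup; `NowPlaying`, `subscribe`, `broadcast` and the payload fields are hypothetical.

```ruby
# Holds the current broadcast state and fans updates out to subscribers.
class NowPlaying
  attr_reader :current

  def initialize
    @current = nil # e.g. { clip_id: "...", started_at_ms: ... }
    @subscribers = []
  end

  # Registers a client callback and immediately sends it the current state,
  # so clients that connect mid-broadcast can still seek into the clip.
  def subscribe(&callback)
    @subscribers << callback
    callback.call(@current) if @current
  end

  # Called when the venue's tooling reports that a new clip has started.
  def broadcast(clip_id:, started_at_ms:)
    @current = { clip_id: clip_id, started_at_ms: started_at_ms }
    @subscribers.each { |cb| cb.call(@current) }
  end
end
```

Persisting the latest notification (rather than only relaying it) is what lets a phone that opens the app halfway through a clip catch up immediately.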

Built with…

- Astro for build

- Pusher for web sockets

- Mux for streaming the ambient soundtrack

- Turso for persisting the Sphere notifications

- React and Tailwind for UI

- Vercel for CI and infra

You can read the full case-study over at Hyperlaunch.

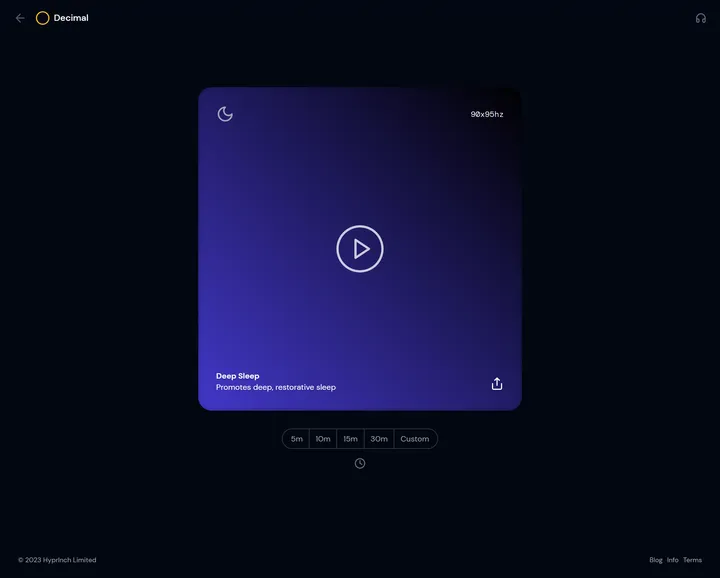

It’s been a while since I indulged in a pure piece of R&D, and the list of tools I’ve been keen to play with has grown pretty lengthy. I finally managed to carve out a week to get down to some serious experimentation and the result is Decimal, a free Binaural Beats generator delivered as a PWA.

I’ve been using Binaural Beats ever since I heard about them in the Huberman Lab episode on concentration, Focus Toolkit: Tools to Improve Your Focus & Concentration. I’ll add that I’ve also found them incredibly effective as a sleep aid.

While the concept works for me, the existing apps are generally pretty poor; ad-laden and lacking in terms of UX. With Decimal, I wanted to deliver an app that users can love, without the ad and tracking bloat that comes with so many apps. My main goals were:

- Exceptional speed and performance

- Joyful to use - nicer than any existing apps

- No invasive ads or tracking

I hope I’ve achieved all three. The end result is what I’ve started to refer to as a toy; it’s fun to use, singular in purpose and has no intention of delivering any commercial outcomes. A true “small web” project.

Tech wise, the app is built with Astro, with some React islands peppered throughout - for interactive components like the player controls. I dropped in Million.js because… well why not?

The biggest epiphany for me was using Nanostores to share state between disparate React components, like the main player and the persisted mini player in the header. Nanostores make this super easy, and the resulting code is far clearer than the equivalent would be with a Provider/Context hook. I’ll definitely be using this approach in future.
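The appeal of Nanostores is that a store is just a small observable value living outside the component tree, so any component can read it or subscribe to it without a shared Provider. The same idea in miniature, written in Ruby for consistency with the other sketches here; the `Atom` class is loosely modelled on the shape of a Nanostores atom (`get`, `set`, `listen`) but is not its implementation, and the player “components” are just hypothetical arrays.

```ruby
# A minimal observable value that any "component" can read or subscribe to.
class Atom
  def initialize(value)
    @value = value
    @listeners = []
  end

  def get
    @value
  end

  # Updates the value and notifies listeners only when it actually changes.
  def set(new_value)
    return if new_value == @value

    @value = new_value
    @listeners.each { |listener| listener.call(@value) }
  end

  # Registers a listener and returns a lambda that unsubscribes it.
  def listen(&listener)
    @listeners << listener
    -> { @listeners.delete_if { |l| l.equal?(listener) } }
  end
end

# Two independent "components" sharing playback state without a Provider.
now_playing = Atom.new(nil)
main_player_log = []
mini_player_log = []
now_playing.listen { |track| main_player_log << track }
unbind_mini = now_playing.listen { |track| mini_player_log << track }

now_playing.set("40 Hz Gamma")
unbind_mini.call
now_playing.set("10 Hz Alpha")
```

Neither listener knows about the other; they only share a reference to the store, which is what keeps the main player and the header mini player decoupled.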

The tones themselves are generated with the Web Audio API, so you can switch from the suggested presets to entirely custom frequencies. In line with my three goals above, I was eager to get lock screen controls working on mobile. The Media Session API, however, doesn’t have any awareness of Web Audio instances, so I resorted to a hack: playing a looping MP3 of silence, and then binding to the Media Session callbacks for that <audio /> to control the Web Audio output.
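For anyone curious about the maths: a binaural beat comes from playing two slightly different pure tones, one per ear, and the perceived beat frequency is the difference between them. So a 200 Hz carrier with a 10 Hz beat could be 195 Hz in one ear and 205 Hz in the other (another common convention puts the carrier in one ear and carrier + beat in the other). In the app this is done with Web Audio oscillators; the sketch below just computes the two frequencies and a few stereo samples in Ruby, with `binaural_frequencies` and `stereo_samples` as hypothetical helper names.

```ruby
# Splits the beat evenly around the carrier: left = carrier - beat/2, right = carrier + beat/2.
def binaural_frequencies(carrier_hz, beat_hz)
  [carrier_hz - beat_hz / 2.0, carrier_hz + beat_hz / 2.0]
end

# Generates `count` stereo samples ([left, right] pairs in -1.0..1.0) of two sine waves,
# conceptually like two oscillators each routed to its own channel.
def stereo_samples(carrier_hz, beat_hz, sample_rate: 44_100, count: 4)
  left_hz, right_hz = binaural_frequencies(carrier_hz, beat_hz)
  Array.new(count) do |i|
    t = i.to_f / sample_rate
    [Math.sin(2 * Math::PI * left_hz * t), Math.sin(2 * Math::PI * right_hz * t)]
  end
end

left, right = binaural_frequencies(200, 10)
puts "#{left} Hz / #{right} Hz, beat #{right - left} Hz" # prints "195.0 Hz / 205.0 Hz, beat 10.0 Hz"
```

Because the beat only exists as the difference between the two channels, it needs headphones: played through a single speaker, the tones simply mix acoustically.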

All of this is made feasible with Astro’s <ViewTransitions />. I can just append transition:persist to any component to have it persist in the client between page navigations, so there’s no loss of audio playback etc. as you navigate around. You get some fantastic transitions too, with no implementation overhead.

Astro is increasingly looking like a framework/build tool I can go all in on; the life cycle is super intuitive and ability to drop in piecemeal UI framework components is incredible. The default 💯 page speed scores are pretty compelling, and because the app compiles to static and generates the audio entirely in the client, it costs next to nothing to run.

Tech aside, I’ve also done some experimentation with using GPT-4 and DALL·E 3 to generate blog posts that I hope will deliver some educational and actionable information for newcomers to binaural beats. I really struggle to pen that sort of content myself, so this was a novel way to overcome writer’s block; hopefully some craftsmanship at the prompt level pays off in the output. These posts will be rolling out on the blog over the next couple of months.

Anyone who has worked with engineers knows that impl. time is not a pure function of complexity, but is greatly affected by their interest in the work.

This tweet by Lea Verou really resonated with me. I’ve experienced this in myself and other developers I’ve collaborated with. IMHO, a “10× engineer” isn’t born purely of talent, but of a combination of domain understanding and vested interest in the problem space.

corporations are nothing more than a collection of contracts between different parties primarily shareholders, directors, employees, suppliers, and customers.

Giving the blogging thing another go. Super simple stack - but hopefully that means I’ll keep it updated:

- Astro for dev/build

- MDX for content

- Swup for client side page transitions

- Tailwind for styling (with @tailwindcss/typography)

- Space Grotesk for display, Poppins for body

- Deployed to Vercel

- As always, photo of me by Rachel Carney

I’ll definitely refine the design over time, but let’s see how well I do at keeping this thing up-to-date for now…